What is a rubric?

A rubric is a rule for converting unstructured responses on an assessment into structured data that we can use psychometrically.

Why do we need rubrics?

Measurement is a quantitative endeavor. In psychometrics, we are trying to measure things like knowledge, achievement, aptitude, or skills, so we need a way to convert qualitative data into quantitative data. We can still keep the qualitative data on hand for certain uses, but we typically need the quantitative data for the primary use. For example, an essay written in school needs to be converted to a score, but the teacher might also want to talk with the student about it to provide a learning opportunity.

A rubric is a defined set of rules to convert open-response items like essays into usable quantitative data, such as scoring the essay 0 to 4 points.

How many rubrics do I need?

In some cases, a single rubric will suffice. This is typical in mathematics, where the goal is a single correct answer. In writing, the goal is often more complex. You might be assessing writing and argumentative ability at the same time you are assessing language skills. For example, you might have rubrics for spelling, grammar, paragraph structure, and argument structure – all on the same essay.

Examples

Spelling rubric for an essay

| Points | Description |
|---|---|
| 0 | Essay contains 5 or more spelling mistakes |
| 1 | Essay contains 1 to 4 spelling mistakes |
| 2 | Essay does not contain any spelling mistakes |
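Because the spelling rubric is purely a count-based rule, it can be written as a small function. The sketch below is only an illustration; the function name `spelling_points` is hypothetical, and it assumes a rater or spell checker has already counted the mistakes.

```python
def spelling_points(num_mistakes: int) -> int:
    """Map a count of spelling mistakes to rubric points (0 to 2)."""
    if num_mistakes < 0:
        raise ValueError("num_mistakes must be non-negative")
    if num_mistakes == 0:
        return 2  # no spelling mistakes
    if num_mistakes <= 4:
        return 1  # 1 to 4 spelling mistakes
    return 0      # 5 or more spelling mistakes


# Score a few essays with different mistake counts.
print([spelling_points(n) for n in (0, 1, 4, 5, 12)])  # [2, 1, 1, 0, 0]
```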

Argument rubric for an essay

“Your school is considering the elimination of organized sports. Write an essay to provide to the School Board that provides 3 reasons to keep sports, with a supporting explanation for each.”

| Points | Description |
|---|---|
| 0 | Student does not include any reasons with explanation (includes providing 3 reasons but no explanations) |
| 1 | Student provides 1 reason with a clear explanation |
| 2 | Student provides 2 reasons with clear explanations |
| 3 | Student provides 3 reasons with clear explanations |

Answer rubric for math

| Points | Description |
|---|---|
| 0 | Student provides no response or a response that does not indicate understanding of the problem. |
| 1 | Student provides a response that indicates understanding of the problem, but does not arrive at correct answer OR provides the correct answer but no supporting work. |
| 2 | Student provides a response with the correct answer and supporting work that explains the process. |
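The math rubric combines several rater judgments rather than a single count. One possible way to encode it is sketched below, assuming the rater records three yes/no judgments per response. The function name and its parameters are hypothetical, and the handling of edge cases the rubric does not spell out is an assumption.

```python
def math_points(shows_understanding: bool,
                correct_answer: bool,
                shows_work: bool) -> int:
    """Map three rater judgments to math rubric points (0 to 2)."""
    if correct_answer and shows_work:
        return 2  # correct answer with supporting work
    if shows_understanding or correct_answer:
        return 1  # understanding without the answer, or answer without work
    return 0      # no response, or no evidence of understanding


print(math_points(True, True, True))     # 2
print(math_points(True, False, True))    # 1
print(math_points(False, True, False))   # 1
print(math_points(False, False, False))  # 0
```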

How do I score tests with a rubric?

The traditional approach is to take the integers supplied by the rubric and add them to the number-correct score. This is consistent with classical test theory, and therefore fits with conventional statistics such as coefficient alpha for reliability and the Pearson correlation for discrimination. However, the modern paradigm of assessment is item response theory, which analyzes the rubric data much more deeply and applies advanced mathematical models such as the generalized partial credit model (Muraki, 1992).
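The classical approach can be sketched in a few lines. The example below assumes a small, made-up data set of five examinees who answered three right/wrong items plus one essay scored 0-4 on a rubric. The function names are hypothetical, and the code uses only the Python standard library.

```python
from statistics import pvariance


def total_scores(responses):
    """Sum the item points for each examinee (rows = examinees, columns = items)."""
    return [sum(row) for row in responses]


def coefficient_alpha(responses):
    """Coefficient (Cronbach's) alpha from an examinee-by-item score matrix."""
    k = len(responses[0])  # number of items
    item_vars = [pvariance([row[j] for row in responses]) for j in range(k)]
    total_var = pvariance(total_scores(responses))
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Made-up data: three dichotomous items, then one essay scored 0-4.
responses = [
    [1, 1, 0, 3],
    [0, 1, 0, 1],
    [1, 1, 1, 4],
    [0, 0, 0, 0],
    [1, 0, 1, 2],
]

print(total_scores(responses))  # [5, 2, 7, 0, 4]
print(round(coefficient_alpha(responses), 3))
```

Note that the rubric-scored essay simply contributes more possible points than each right/wrong item, so it carries more weight in the total score.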

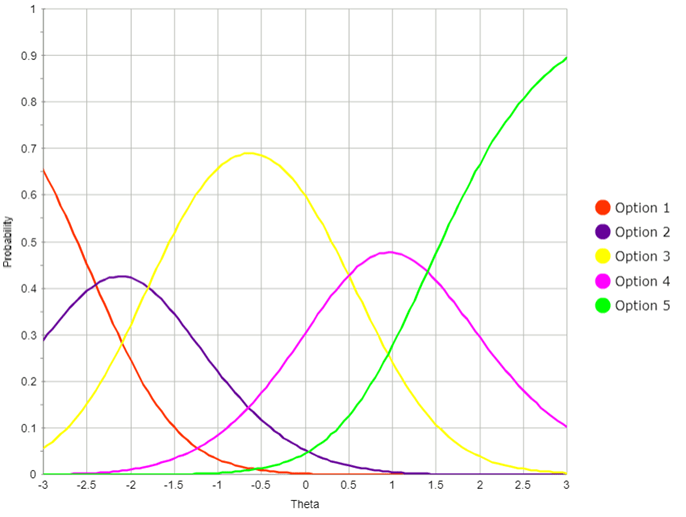

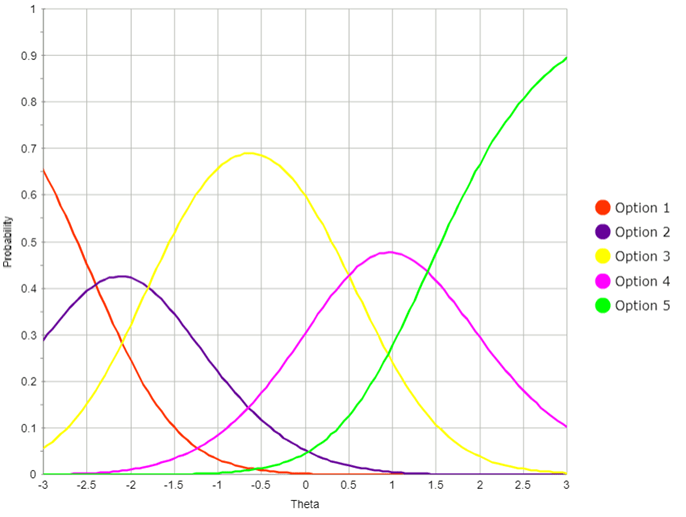

As an example, imagine that you have an essay which is scored 0-4 points. The graph shows the probability of earning each point level, as a function of ability (Theta). Someone who is average (Theta=0.0) is most likely to get 2 points, the yellow line. Someone at Theta=1.0 is most likely to get 3 points. Note that the curves for the middle point levels are bell-shaped, while the curves for the lowest and highest point levels approach an asymptote of 1.0 at the low and high ends of Theta, respectively. That is, the higher the student's ability, the more likely they are to get 4 out of 4 points, but that probability can never go above 100%.
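To make the shape of these curves concrete, here is a minimal sketch of the category probabilities under the generalized partial credit model, written in its step-parameter form. The discrimination `a` and the step parameters in `thresholds` are made-up illustrative values, not estimates from real data, and the function name is hypothetical.

```python
import math


def gpcm_probabilities(theta, a, thresholds):
    """Probability of each point level 0..m under the generalized partial credit model.

    theta      -- examinee ability
    a          -- item discrimination
    thresholds -- step parameters b_1..b_m, one per step between adjacent points
    """
    # z_0 = 0 and z_k = sum over v <= k of a * (theta - b_v)
    z = [0.0]
    for b in thresholds:
        z.append(z[-1] + a * (theta - b))
    # Subtract the maximum before exponentiating for numerical stability.
    z_max = max(z)
    exp_z = [math.exp(v - z_max) for v in z]
    total = sum(exp_z)
    return [e / total for e in exp_z]


# Illustrative parameters for an essay scored 0-4 (assumed, not estimated).
a = 1.0
thresholds = [-1.5, -0.5, 0.5, 1.5]

for theta in (-3.0, 0.0, 1.0, 3.0):
    probs = gpcm_probabilities(theta, a, thresholds)
    most_likely = probs.index(max(probs))
    print(theta, [round(p, 3) for p in probs], "most likely points:", most_likely)
```

With these assumed parameters, an examinee at Theta=0.0 is most likely to earn 2 points and one at Theta=1.0 is most likely to earn 3 points, matching the pattern described above.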

How can I efficiently implement a scoring rubric?

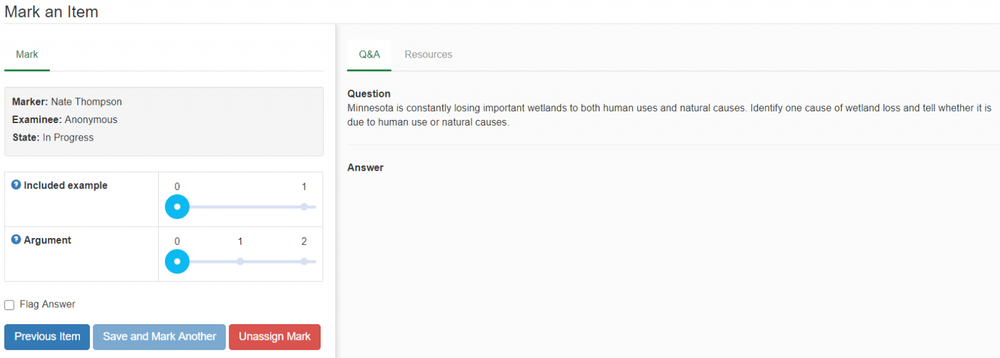

It is much easier to implement a scoring rubric if your online assessment platform supports rubrics in an online marking module, especially if the platform already has integrated psychometrics like the generalized partial credit model. The image shows an example of what an online essay marking system might look like. Such a system should have advanced functionality, such as allowing multiple rubrics per item, multiple raters per response, anonymity, and more.

What about automated essay scoring?

You also have the option of using automated essay scoring; once you have some data from human raters on rubrics, you can train machine learning models to help. Unfortunately, the world is not yet at the point where you can just feed a pile of student papers to a droid for grading!

Nathan Thompson, PhD
